Snowflake Schema Detection Feature for CSV Files

Check out my courses in Udemy. Popular courses include building utility using Snowpark Python API to ingest CSV data as well as JSON data automatically without writing any DDL/DML statement and save 95% of manual effort.

Introduction

Data loading processes are crucial for timely insights and decision-making. Snowflake, has add its support for CSV & JSON using “Infer Schema” table function that streamlines the process of loading data from CSV files into Snowflake tables. We will explore the importance of this feature and how it empowers data engineers and analysts to expedite data loading while ensuring accuracy and consistency.

Importance of Infer Schema in Snowflake

Traditionally, defining table structure (DDL) while loading data can be a manual and error-prone task, especially when dealing with large datasets. With Snowflake’s “Infer Schema” feature for CSV and JSON, this tedious process is simplified. The feature automatically reads a CSV file from a specified stage location(s) and intelligently infers column names and data types based on the CSV file’s contents. This automation not only saves valuable time and effort but also reduces the risk of schema mismatches, ensuring data integrity throughout the data pipeline.

How to Use Infer Schema in Snowflake

To utilize this powerful feature, data practitioners need to follow a few simple steps.

- Ensure that the CSV file is staged in Snowflake with appropriate permissions.

- Next, create a new table using the “CREATE TABLE” statement, and instead of specifying explicit column names and data types, use “INFER SCHEMA” as the column definition.

- Snowflake will automatically analyze the CSV data file(s) and create the table DDL accordingly.

Watch the video below to see the Infer Schema feature in action and discover how it can efficiency into your data loading workflows

Data Files

Download the CSV data files from this git location.

Part-1 : CSV Infer Schema SQL Construct

order_data_10.csv

order_id,order_date,order_time,product_name,order_price,tax_value,existing_customer,customer_name,mobile_number

1,2023-06-04,13:19:14,Moto G Power,956.27,95.63,False,Robert Sherman,9152218975

2,2023-06-19,06:44:23,Nokia 8.3,658.97,65.9,True,Raymond Phillips,9872334088

3,2023-04-08,05:11:22,Moto G Power,1487.21,148.72,False,Thomas Thompson,9513069603

4,2023-06-06,03:33:53,iPhone 12,1310.52,131.05,True,Casey Mcdaniel,9620517344

5,2023-02-12,17:30:23,Galaxy Note 10,845.79,84.58,False,Jennifer Griffin,9045335526

6,2023-02-07,15:41:14,iPhone 12,1351.61,135.16,False,Dr. Michele Huang,9377330463

7,2023-06-15,12:10:09,iPhone SE,593.82,59.38,False,Timothy Guerrero,9785992101

8,2023-01-01,00:33:04,Galaxy Note 10,1334.09,133.41,False,Eric Hawkins,9324423643

9,2023-01-26,16:47:11,iPhone SE,1051.47,105.15,False,Sara Williams,9854478422

10,2023-05-21,00:11:13,Galaxy S21,687.52,68.75,False,Donna Velez,9876003384-- setting context for this worksheet.

use role sysadmin;

use database demo;

use schema sales;

use warehouse compute_wh;

select * from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10.csv',

file_format=>'my_csv_format'

)

);

list @order_data;

create or replace file format my_file_format_01

type = 'csv'

compression = 'auto'

field_delimiter = ','

record_delimiter = '\n'

field_optionally_enclosed_by = '\042'

skip_header = 1;

desc file format my_file_format_01;

select t.$1,t.$2,t.$3,t.$4,t.$5,t.$6,t.$7,t.$8,t.$9

from @order_data/csv/records=10/order_data_10.csv

(file_format => my_file_format_01) t;

select *

from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10.csv',

file_format=>'my_file_format_01'

)

);

select

current_region() as SF_Hosted_Region,

current_version() as Snowflake_Version;

create table my_order_tbl

using template (

select array_agg(object_construct(*))

from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10.csv',

file_format=>'my_csv_format'

)

));

desc table my_order_tbl;

select * from my_order_tbl;Part-02 SQL Scripts — Performance

create or replace file format my_file_format_02

type = 'csv'

compression = 'auto'

field_delimiter = ','

record_delimiter = '\n'

field_optionally_enclosed_by = '\042'

parse_header = true;

list @order_data/csv/records;

alter session set use_cached_result = false;

use warehouse wh01;

-- csv file having only 10 orders

select *

from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10.csv',

file_format=>'my_file_format_02'

)

);

-- csv file having only 1000 orders

use warehouse wh02;

select *

from table(

infer_schema(

location=>'@order_data/csv/records=1000/order_data_1k.csv',

file_format=>'my_file_format_02'

)

);

-- csv file having only 10,000 orders

use warehouse wh03;

select *

from table(

infer_schema(

location=>'@order_data/csv/records=10000/order_data_10k.csv',

file_format=>'my_file_format_02'

)

);

-- csv file having only 1,000,000 orders

use warehouse wh04;

select *

from table(

infer_schema(

location=>'@order_data/csv/records=1000000/order_data_1M.csv',

file_format=>'my_file_format_02'

)

);

-- partition data and in infer-schema

list @order_data/partition-csv;

select *

from table(

infer_schema(

location=>'@order_data/partition-csv',

file_format=>'my_file_format_02'

)

);

Part-03 SQL Scripts Data Types

order_data_10_array.csv

order_id,order_date,order_time,product_name,order_price,tax_value,existing_customer,customer_name,mobile_number

1,2023-06-04,13:19:14,Moto G Power,956.27,[95.63,False,Robert Sherman,[9152218975,1234567890]

2,2023-06-19,06:44:23,Nokia 8.3,658.97,65.9,True,Raymond Phillips,[9872334088,1234567890]

3,2023-04-08,05:11:22,Moto G Power,1487.21,148.72,False,Thomas Thompson,[9513069603,1234567890]

4,2023-06-06,03:33:53,iPhone 12,1310.52,131.05,True,Casey Mcdaniel,[9620517344,1234567890]

5,2023-02-12,17:30:23,Galaxy Note 10,845.79,84.58,False,Jennifer Griffin,[9045335526,1234567890]

6,2023-02-07,15:41:14,iPhone 12,1351.61,135.16,False,Dr. Michele Huang,[9377330463,1234567890]

7,2023-06-15,12:10:09,iPhone SE,593.82,59.38,False,Timothy Guerrero,[9785992101,1234567890]

8,2023-01-01,00:33:04,Galaxy Note 10,1334.09,133.41,False,Eric Hawkins,[9324423643,1234567890]

9,2023-01-26,16:47:11,iPhone SE,1051.47,105.15,False,Sara Williams,[9854478422,1234567890]

10,2023-05-21,00:11:13,Galaxy S21,687.52,68.75,False,Donna Velez,[9876003384,1234567890]order_data_10_json.csv

order_id,order_date,order_time,product_name,order_price,tax_value,existing_customer,customer_name,mobile_number

1,2023-06-04,13:19:14,Moto G Power,956.27,95.63,False,Robert Sherman,'{"mobile": "9152218975", "desk": "12345678"}'

2,2023-06-19,06:44:23,Nokia 8.3,658.97,65.9,True,Raymond Phillips,'{"mobile": "9152218975", "desk": "12345678"}'

3,2023-04-08,05:11:22,Moto G Power,1487.21,148.72,False,Thomas Thompson,'{"mobile": "9152218975", "desk": "12345678"}'

4,2023-06-06,03:33:53,iPhone 12,1310.52,131.05,True,Casey Mcdaniel,'{"mobile": "9152218975", "desk": "12345678"}'

5,2023-02-12,17:30:23,Galaxy Note 10,845.79,84.58,False,Jennifer Griffin,'{"mobile": "9152218975", "desk": "12345678"}'

6,2023-02-07,15:41:14,iPhone 12,1351.61,135.16,False,Dr. Michele Huang,'{"mobile": "9152218975", "desk": "12345678"}'

7,2023-06-15,12:10:09,iPhone SE,593.82,59.38,False,Timothy Guerrero,'{"mobile": "9152218975", "desk": "12345678"}'

8,2023-01-01,00:33:04,Galaxy Note 10,1334.09,133.41,False,Eric Hawkins,'{"mobile": "9152218975", "desk": "12345678"}'

9,2023-01-26,16:47:11,iPhone SE,1051.47,105.15,False,Sara Williams,'{"mobile": "9152218975", "desk": "12345678"}'

10,2023-05-21,00:11:13,Galaxy S21,687.52,68.75,False,Donna Velez,'{"mobile": "9152218975", "desk": "12345678"}'order_data_10_timestamp.csv

order_id,order_date,product_name,order_price,tax_value,existing_customer,customer_name,mobile_number

1,2023-06-04 13:19:14.109,Moto G Power,956.27,95.63,False,Robert Sherman,9152218975

2,2023-06-19 06:44:23.208,Nokia 8.3,658.97,65.9,True,Raymond Phillips,9872334088

3,2023-04-08 05:11:22.307,Moto G Power,1487.21,148.72,False,Thomas Thompson,9513069603

4,2023-06-06 03:33:53.406,iPhone 12,1310.52,131.05,True,Casey Mcdaniel,9620517344

5,2023-02-12 17:30:23.505,Galaxy Note 10,845.79,84.58,False,Jennifer Griffin,9045335526

6,2023-02-07 15:41:14.604,iPhone 12,1351.61,135.16,False,Dr. Michele Huang,9377330463

7,2023-06-15 12:10:09.703,iPhone SE,593.82,59.38,False,Timothy Guerrero,9785992101

8,2023-01-01 00:33:04.802,Galaxy Note 10,1334.09,133.41,False,Eric Hawkins,9324423643

9,2023-01-26 16:47:11.901,iPhone SE,1051.47,105.15,False,Sara Williams,9854478422

10,2023-05-21 00:11:13.999,Galaxy S21,687.52,68.75,False,Donna Velez,9876003384

-- data type - object

-- array

-- json

list @order_data/csv/records=10/order_data_10_array.csv; -- array

list @order_data/csv/records=10/order_data_10_json.csv; -- json

list @order_data/csv/records=10/order_data_10_timestamp.csv; -- timestamp

alter session set use_cached_result = false;

use warehouse wh01;

-- object data type

select *

from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10_array.csv',

file_format=>'my_file_format_02'

)

);

-- json data type

select *

from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10_json.csv',

file_format=>'my_file_format_02'

)

);

-- timestamp column type

select *

from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10_timestamp.csv',

file_format=>'my_file_format_02'

)

);

Part-04 SQL Scripts

order_data_10_col_mismatch-case-1.csv

order_id,order_date,order_time,product_name,order_price,tax_value,existing_customer,customer_name,mobile_number

1,2023-06-04,13:19:14,Moto G Power,956.27,95.63,False,Robert Sherman

2,2023-06-19,06:44:23,Nokia 8.3,658.97,65.9,True,Raymond Phillips

3,2023-04-08,05:11:22,Moto G Power,1487.21,148.72,False,Thomas Thompson

4,2023-06-06,03:33:53,iPhone 12,1310.52,131.05,True,Casey Mcdaniel

5,2023-02-12,17:30:23,Galaxy Note 10,845.79,84.58,False,Jennifer Griffin

6,2023-02-07,15:41:14,iPhone 12,1351.61,135.16,False,Dr. Michele Huang

7,2023-06-15,12:10:09,iPhone SE,593.82,59.38,False,Timothy Guerrero

8,2023-01-01,00:33:04,Galaxy Note 10,1334.09,133.41,False,Eric Hawkins

9,2023-01-26,16:47:11,iPhone SE,1051.47,105.15,False,Sara Williams

10,2023-05-21,00:11:13,Galaxy S21,687.52,68.75,False,Donna Velezorder_data_10_col_mismatch-case-2.csv

order_id,order_date,order_time,product_name,order_price,tax_value,existing_customer,customer_name

1,2023-06-04,13:19:14,Moto G Power,956.27,95.63,False,Robert Sherman,9152218975

2,2023-06-19,06:44:23,Nokia 8.3,658.97,65.9,True,Raymond Phillips,9872334088

3,2023-04-08,05:11:22,Moto G Power,1487.21,148.72,False,Thomas Thompson,9513069603

4,2023-06-06,03:33:53,iPhone 12,1310.52,131.05,True,Casey Mcdaniel,9620517344

5,2023-02-12,17:30:23,Galaxy Note 10,845.79,84.58,False,Jennifer Griffin,9045335526

6,2023-02-07,15:41:14,iPhone 12,1351.61,135.16,False,Dr. Michele Huang,9377330463

7,2023-06-15,12:10:09,iPhone SE,593.82,59.38,False,Timothy Guerrero,9785992101

8,2023-01-01,00:33:04,Galaxy Note 10,1334.09,133.41,False,Eric Hawkins,9324423643

9,2023-01-26,16:47:11,iPhone SE,1051.47,105.15,False,Sara Williams,9854478422

10,2023-05-21,00:11:13,Galaxy S21,687.52,68.75,False,Donna Velez,9876003384

list @order_data/csv/records=10/order_data_10_col_mismatch-case-1.csv; -- 9 header vs 8 data fields

list @order_data/csv/records=10/order_data_10_col_mismatch-case-2.csv; -- 8 header vs 9 data fields

alter session set use_cached_result = false;

use warehouse wh01;

-- 9 header vs 8 data fields

select *

from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10_col_mismatch-case-1.csv',

file_format=>'my_file_format_01'

)

);

desc file format my_file_format_01;

desc file format my_file_format_02;

select t.$1,t.$2,t.$3,t.$4,t.$5,t.$6,t.$7,t.$8,t.$9

from @order_data/csv/records=10/order_data_10_col_mismatch-case-1.csv

(file_format => my_file_format_01) t;

-- 8 header vs 9 data fields

select *

from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10_col_mismatch-case-2.csv',

file_format=>'my_file_format_01'

)

);

select t.$1,t.$2,t.$3,t.$4,t.$5,t.$6,t.$7,t.$8,t.$9

from @order_data/csv/records=10/order_data_10_col_mismatch-case-2.csv

(file_format => my_file_format_01) t;

-- dont get confused.. and make sure, use proper file formats

-- double quot

select *

from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10_all_quote.csv',

file_format=>'my_file_format_02'

)

);

select t.$1,t.$2,t.$3,t.$4,t.$5,t.$6,t.$7,t.$8,t.$9

from @order_data/csv/records=10/order_data_10_all_quote.csv

(file_format => my_file_format_01) t;

-- double quote and escape charaters...

select *

from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10_quote.csv',

file_format=>'my_file_format_02'

)

);

select t.$1,t.$2,t.$3,t.$4,t.$5,t.$6,t.$7,t.$8,t.$9

from @order_data/csv/records=10/order_data_10_quote.csv

(file_format => my_file_format_01) t;

-- column names in ddl statement

create table my_order_tbl_01

using template (

select array_agg(object_construct(*))

from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10_all_quote.csv',

file_format=>'my_file_format_02'

)

));

select get_ddl('table','my_order_tbl_01')Part-05 SQL Script

order_data_10_all_quote.csv

"order_id","order_date","order_time","product_name","order_price","tax_value","existing_customer","customer_name","mobile_number"

"1","2023-04-30","17:41:09","Nokia 8.3","291.09","29.11","False","Christina Garrett","9759437679"

"2","2023-01-13","11:22:40","OnePlus 9","678.22","67.82","True","Stephanie Flores","9119159206"

"3","2023-02-18","20:44:22","Pixel 5","1476.67","147.67","True","Christopher Johnson","9359736090"

"4","2023-07-11","09:53:46","iPhone 12","951.86","95.19","False","Sydney Wilson","9700131702"

"5","2023-04-18","22:11:08","iPhone SE","752.38","75.24","True","Chloe Jarvis","9645868562"

"6","2023-01-16","01:02:55","OnePlus 9","822.46","82.25","True","Michael Obrien V","9191187381"

"7","2023-04-17","21:44:38","Galaxy S21","1258.99","125.9","True","Nicholas Baker","9218283471"

"8","2023-06-02","20:08:29","Pixel 5","943.22","94.32","False","Jesse White","9541676867"

"9","2023-07-01","10:28:41","iPhone SE","1137.07","113.71","True","Jacob Ramirez","9141946204"

"10","2023-04-15","20:02:09","Moto G Power","1356.38","135.64","True","Joshua Carlson","9166630027"

list @order_data/csv/records=10/order_data_10_quote.csv;

-- create a table using template

create or replace temporary table my_order_tbl_01

using template (

select array_agg(object_construct(*))

from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10_quote.csv',

file_format=>'my_file_format_02'

)

));

desc table my_order_tbl;

select get_ddl('table','my_order_tbl');

-- what happens if table already exist

-- can I create transient table

-- can I create temporary tablePart-06 SQL Scripts Input/Output

order_data_10_timestamp.csv

order_id,order_date,product_name,order_price,tax_value,existing_customer,customer_name,mobile_number

1,2023-06-04 13:19:14.109,Moto G Power,956.27,95.63,False,Robert Sherman,9152218975

2,2023-06-19 06:44:23.208,Nokia 8.3,658.97,65.9,True,Raymond Phillips,9872334088

3,2023-04-08 05:11:22.307,Moto G Power,1487.21,148.72,False,Thomas Thompson,9513069603

4,2023-06-06 03:33:53.406,iPhone 12,1310.52,131.05,True,Casey Mcdaniel,9620517344

5,2023-02-12 17:30:23.505,Galaxy Note 10,845.79,84.58,False,Jennifer Griffin,9045335526

6,2023-02-07 15:41:14.604,iPhone 12,1351.61,135.16,False,Dr. Michele Huang,9377330463

7,2023-06-15 12:10:09.703,iPhone SE,593.82,59.38,False,Timothy Guerrero,9785992101

8,2023-01-01 00:33:04.802,Galaxy Note 10,1334.09,133.41,False,Eric Hawkins,9324423643

9,2023-01-26 16:47:11.901,iPhone SE,1051.47,105.15,False,Sara Williams,9854478422

10,2023-05-21 00:11:13.999,Galaxy S21,687.52,68.75,False,Donna Velez,9876003384order_data_10_case_sensitive.csv

ORDER_ID,ORDER_DATE,ORDER_TIME,PRODUCT_NAME,ORDER_PRICE,TAX_VALUE,EXISTING_CUSTOMER,customer_name,MOBILE_NUMBER

1,2023-06-04,13:19:14,Moto G Power,956.27,95.63,False,Robert Sherman,9152218975

2,2023-06-19,06:44:23,Nokia 8.3,658.97,65.9,True,Raymond Phillips,9872334088

3,2023-04-08,05:11:22,Moto G Power,1487.21,148.72,False,Thomas Thompson,9513069603

4,2023-06-06,03:33:53,iPhone 12,1310.52,131.05,True,Casey Mcdaniel,9620517344

5,2023-02-12,17:30:23,Galaxy Note 10,845.79,84.58,False,Jennifer Griffin,9045335526

6,2023-02-07,15:41:14,iPhone 12,1351.61,135.16,False,Dr. Michele Huang,9377330463

7,2023-06-15,12:10:09,iPhone SE,593.82,59.38,False,Timothy Guerrero,9785992101

8,2023-01-01,00:33:04,Galaxy Note 10,1334.09,133.41,False,Eric Hawkins,9324423643

9,2023-01-26,16:47:11,iPhone SE,1051.47,105.15,False,Sara Williams,9854478422

10,2023-05-21,00:11:13,Galaxy S21,687.52,68.75,False,Donna Velez,9876003384

-- input and outputs of infer-schema function

-- create a table using template

select *

from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10.csv',

file_format=>'my_file_format_02'

)

);

select *

from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10_timestamp.csv',

file_format=>'my_file_format_02'

)

);

--order_data_10_timestamp.csv

-- case sensitivity of the column headers

select *

from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10_case_sensitive.csv',

file_format=>'my_file_format_02'

,ignore_case => false

)

);

-- ,ignore_case => true

-- file names

select *

from table(

infer_schema(

location=>'@order_data/partition-csv',

files => '/part_1.csv',

file_format=>'my_file_format_02'

,ignore_case => true

)

);

Part-07 SQL Scripts

create or replace file format file_format_skip_header

type = 'csv'

compression = 'auto'

field_delimiter = ','

record_delimiter = '\n'

field_optionally_enclosed_by = '\042'

skip_header = 1;

create or replace file format file_format_parse_header

type = 'csv'

compression = 'auto'

field_delimiter = ','

record_delimiter = '\n'

field_optionally_enclosed_by = '\042'

parse_header = true;

-- file_format_parse_header and standard behaviour

select *

from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10.csv',

file_format=>'file_format_parse_header'

)

);

select *

from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10.csv',

file_format=>'file_format_skip_header'

)

);

select t.$1,t.$2,t.$3,t.$4,t.$5,t.$6,t.$7,t.$8,t.$9

from @order_data/csv/records=10/order_data_10.csv

(file_format => file_format_skip_header) t;

select t.$1,t.$2,t.$3,t.$4,t.$5,t.$6,t.$7,t.$8,t.$9

from @order_data/csv/records=10/order_data_10.csv

(file_format => file_format_parse_header) t;

-- the copy command

-- create a table and then run copy command using parse-header

create or replace table my_order_tbl

using template (

select array_agg(object_construct(*))

from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10.csv',

file_format=>'file_format_parse_header'

)

));

copy into my_order_tbl from @order_data/csv/records=10/order_data_10.csv

FILE_FORMAT = (FORMAT_NAME = 'file_format_skip_header');

select * from my_order_tbl;

Part-08 SQL Scripts

-- we have already seen how to use using-teamplet to create tables

-- lets explore this sql construc more

select array_agg(object_construct(*))

from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10.csv',

file_format=>'my_csv_format'

)

);

--array_agg(object_construct(*))

create table order_data_10

using template (

select array_agg(object_construct(*))

from table(

infer_schema(

location=>'@order_data/csv/records=10/order_data_10.csv',

file_format=>'my_csv_format'

)

));

-- 1M records

create table order_data_1M

using template (

select array_agg(object_construct(*))

from table(

infer_schema(

location=>'@order_data/csv/records=1000000/order_data_1M.csv',

file_format=>'my_file_format_02'

)

));

list @my_stagePart-09 — Infer Schema CSV Limitations

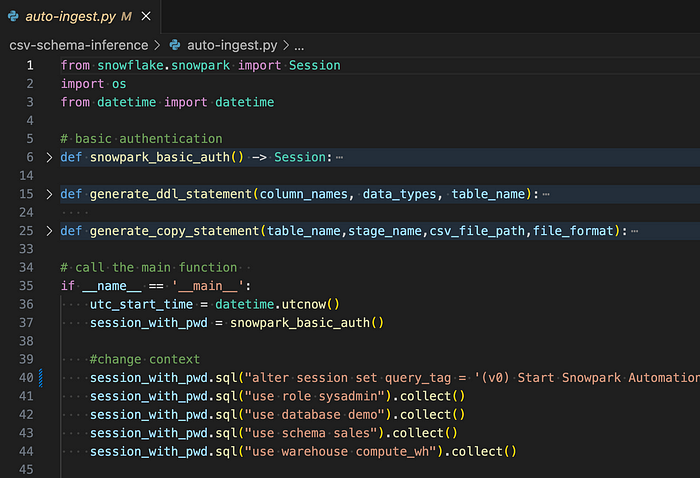

Part-10- Snowpark Python API Automation

Click on this link to access the Snowpark Python API automation code